Ocular Dominance Plasticity Using Altered Reality

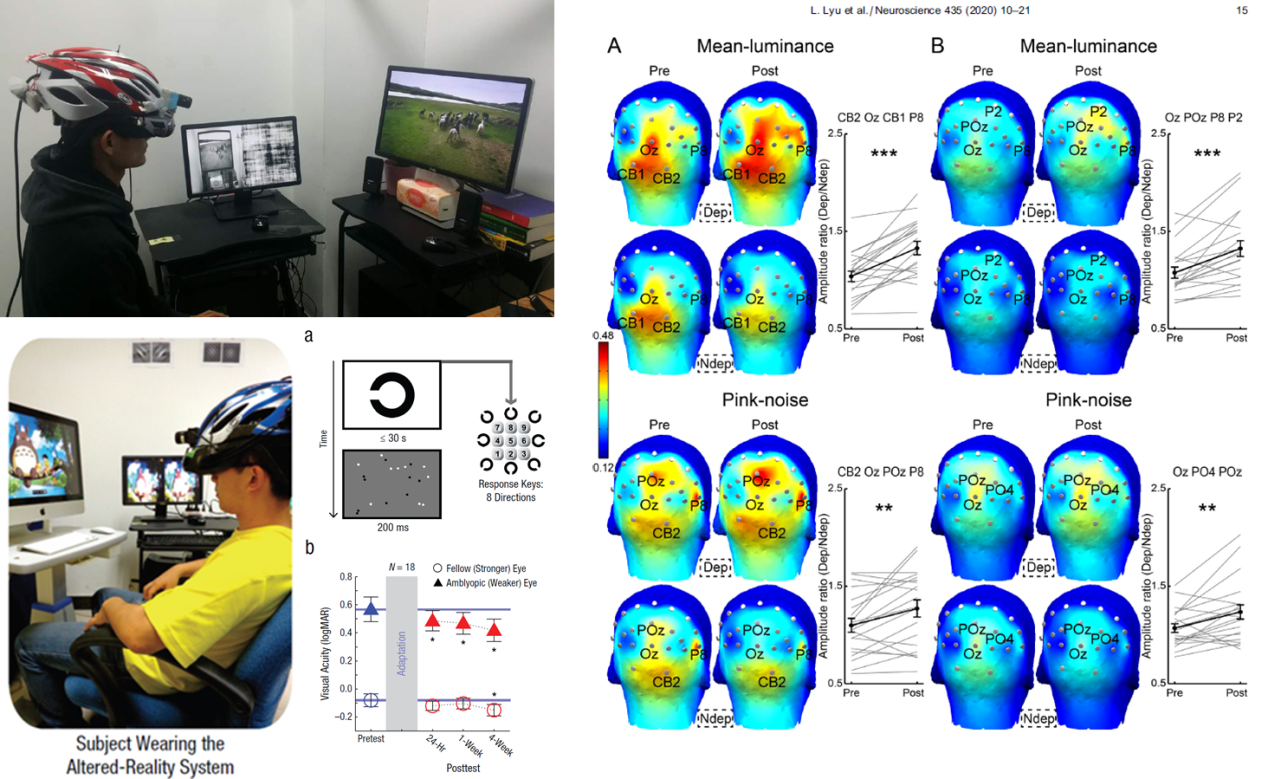

A traditional way to improve vision is perceptual training, which can produce large benefits across a wide variety of tasks in adults. However, frequent training sessions can be difficult to fit into patients' lives and work, which limits compliance. Our group has adopted a complementary approach: allowing observers to perform everyday tasks in an environment that places demands on the mechanisms of plasticity. This long-term adaptation approach has been strengthened by the development of augmented reality (AR). Recent AR-based visual plasticity studies have used a video see-through method based on a head-mounted display (HMD). In typical AR, digital content is overlaid on the camera video of the world. In our visual plasticity studies, by contrast, global image processing is applied in real time to the entire video to alter some feature of the images. We term this type of manipulation altered reality.

Using the altered reality approach, we can present a different, specified video image to each eye and, after observers have adapted to this altered environment for a few hours, measure the resulting changes in visual function both psychophysically and neurophysiologically. We have also applied this technique to the treatment of visual impairments such as amblyopia.
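As a minimal sketch of the kind of per-eye, whole-frame manipulation described above, the Python below takes one grayscale camera frame and returns a left-eye and a right-eye image whose contrast is scaled independently. Contrast scaling, the function names `scale_contrast` and `dichoptic_pair`, and the 0.5 factor are hypothetical choices for illustration, not the group's actual processing pipeline; a real HMD system would apply a step like this to every video frame in real time.

```python
import numpy as np

def scale_contrast(frame, factor):
    """Scale the contrast of a grayscale frame (values in [0, 1]) about its mean luminance.

    Hypothetical example manipulation: factor < 1 lowers contrast, factor = 1 leaves it unchanged.
    """
    frame = np.asarray(frame, dtype=float)
    mean = frame.mean()
    return np.clip(mean + factor * (frame - mean), 0.0, 1.0)

def dichoptic_pair(frame, left_factor=1.0, right_factor=0.5):
    """Return (left, right) eye images: the same camera frame, altered independently per eye."""
    return scale_contrast(frame, left_factor), scale_contrast(frame, right_factor)

# Usage on a stand-in "camera frame" of random luminance values.
frame = np.random.default_rng(0).random((48, 64))
left, right = dichoptic_pair(frame)
```

Because the whole frame is transformed rather than overlaid with new content, the same function could be swapped for any other global filter (blur, orientation filtering, color shifts) without changing the per-eye structure.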

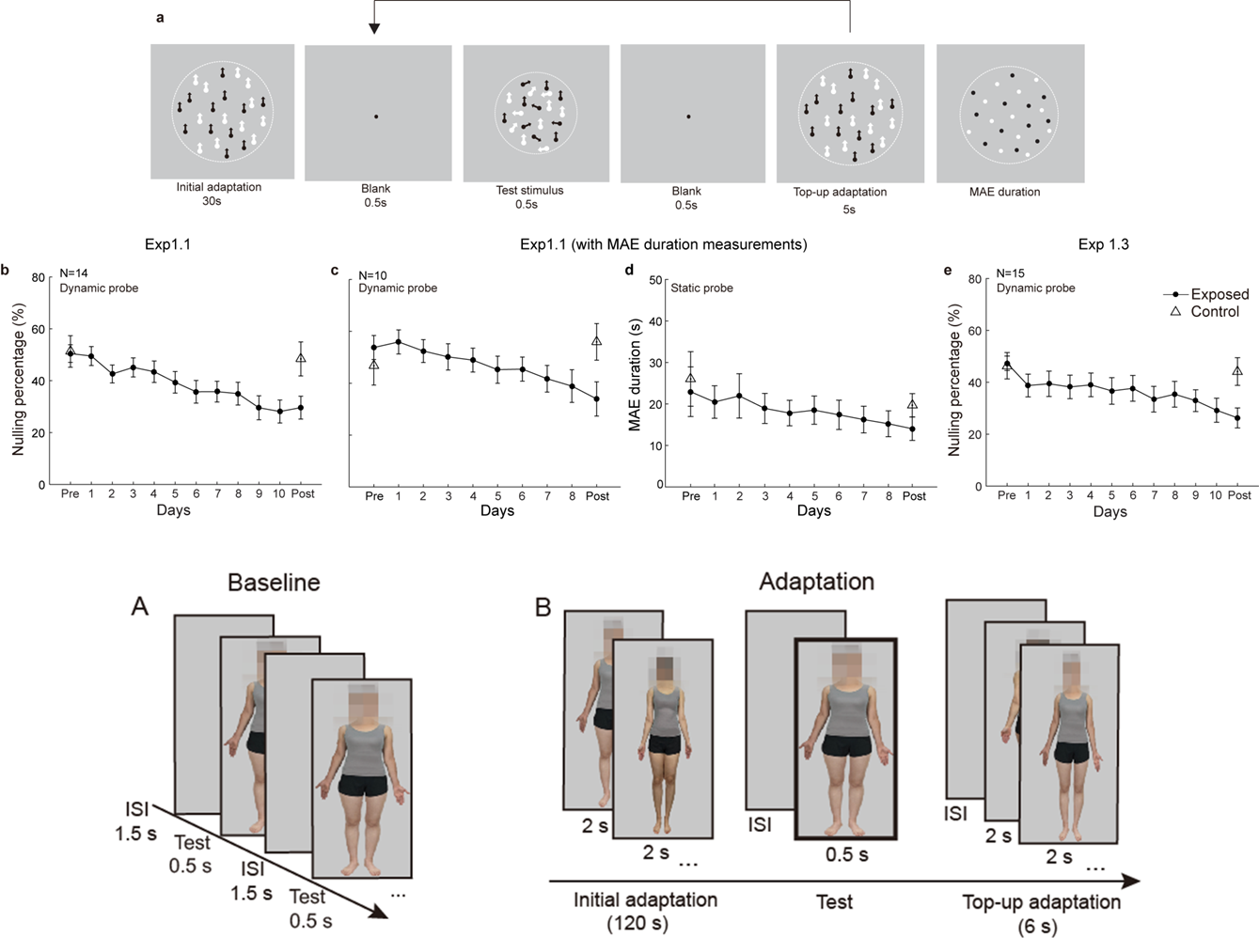

Visual Perception Shaped by Repeated Visual Adaptation

The visual system adjusts to changes in the environment. Such adaptive changes in the brain are often accompanied by distorted visual perception, known as adaptation aftereffects. For example, if you gaze at a waterfall for a while and then look at a stationary rock, the rock may appear to move upward. Because daily life largely repeats from one day to the next for most people, the visual system frequently adapts to similar visual input. Does such repeated visual adaptation have consequences over the long term? By tracking the adaptation aftereffect across multiple daily sessions of adaptation, our previous work has shown that repeated adaptation to basic visual features, such as contrast and motion, can reduce the adaptation aftereffect, which likely reflects habituation of the adaptation mechanism. More recently, we have begun to explore the effects of repeated adaptation to more complex visual stimuli.

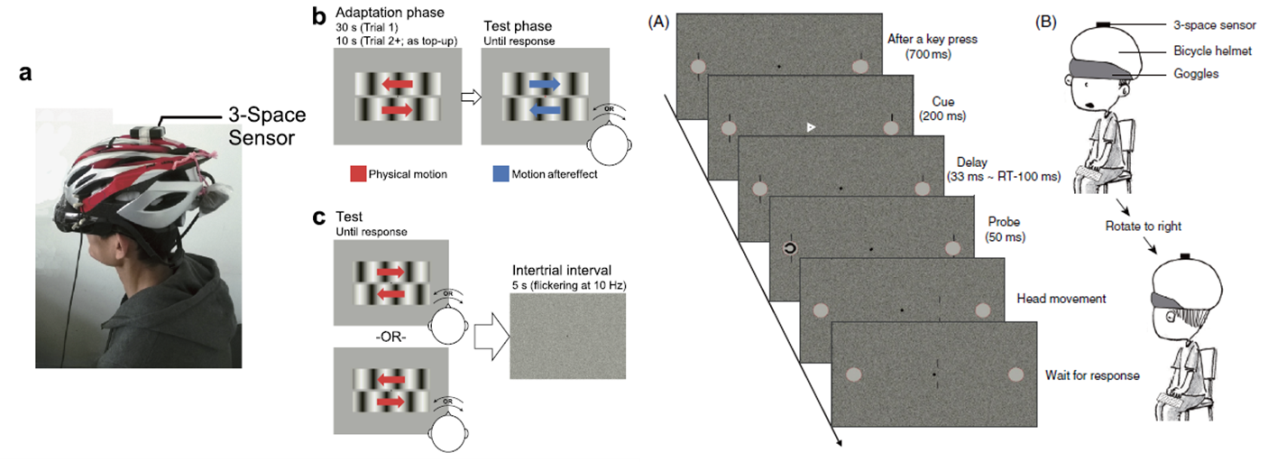

Visual-Vestibular Interactions Using Virtual Reality

Our recent studies on visual-vestibular interactions center on one main topic: the cross-modal bias hypothesis. Living in a multisensory environment leads the brain to develop an association between signals from the visual and vestibular pathways. We hypothesize that over one's lifetime this association becomes so strong that signals from one modality can produce a bias signal in the congruent direction for the other modality. Given the intrinsic noise in neural responses, when the visual input is sufficiently weak and/or uncertain, such a bias signal may readily show up in perception.

This hypothesis is well supported by our findings that vestibular signals modulate the motion aftereffect and the head-rotation-induced flash-lag effect. Beyond the influence of head movement on vision, we have also found that visual-vestibular interactions can occur even while a head rotation is being prepared.
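To illustrate why a weak visual signal lets a cross-modal bias show up, here is a toy signal-detection sketch. It is an illustrative assumption, not a model taken from the studies above: the decision variable is the visual signal (signed along the vestibular direction) plus a small bias plus Gaussian noise, and the observer reports motion in the bias direction whenever that variable is positive. The names `p_bias_direction` and `bias_effect`, and the parameter values, are hypothetical.

```python
import math

def p_bias_direction(visual_signal, bias, noise_sd=1.0):
    """P(d > 0) for the toy decision variable d = visual_signal + bias + N(0, noise_sd**2)."""
    z = (visual_signal + bias) / noise_sd
    return 0.5 * (1.0 + math.erf(z / math.sqrt(2.0)))  # standard normal CDF at z

def bias_effect(visual_signal, bias, noise_sd=1.0):
    """Shift in the report rate caused by the bias, relative to the same signal with no bias."""
    return (p_bias_direction(visual_signal, bias, noise_sd)
            - p_bias_direction(visual_signal, 0.0, noise_sd))

# Same hypothetical bias, visual signals of increasing strength.
for s in (0.0, 1.0, 3.0):
    print(f"visual signal {s}: bias shifts reports by {bias_effect(s, bias=0.3):.3f}")
```

Because the normal CDF is steepest near zero, the same bias shifts the report rate most when the visual signal is near zero and hardly at all when the signal is strong, which is the intuition behind the hypothesis.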